How AI can Build a Better World

Join Matt Celuszak, Founder and CEO of Element Human, as he shares valuable insights on how AI can build a better world through understanding human emotions and behaviors.

In a talk at CrossOver AI, Matt shares insights from his 20 years of experience in people measurement systems, and perspectives on building at the intersection of AI and human experience.

Key Tidbits and Takeaways:

Matt examines three topics at the center of AI development:

- Individual vs. Universal Approaches

  - Universal emotion classification systems often fall short in real-world applications.

  - Individual-based approaches yield more accurate results, especially in diverse populations.

  - Real-world data is messy and requires robust, adaptable AI systems.

- Open vs. Closed Systems

  - Openness in AI development fosters innovation and addresses challenges more effectively.

  - Sharing knowledge and fostering discussions leads to better products and stronger client relationships.

  - Transparency builds trust and helps navigate complex issues like privacy and consent.

- People vs. Profit

  - Ethical considerations should be at the forefront of AI development, even at the cost of short-term profits.

  - Privacy and data protection (e.g., GDPR compliance) are crucial for long-term success.

  - Saying "no" to ethically questionable projects can lead to more meaningful and impactful work.

Matt discusses several challenges we've encountered in developing human-centric AI:

- Bias in facial recognition systems, particularly with diverse skin tones

- Limitations of universal emotion classifiers

- Privacy concerns and the importance of consent

To tackle those, there's a need for:

- Diverse, real-world training data

- Individual-based emotion recognition systems

- Transparent and ethical AI practices

- Strong data privacy measures
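To make the contrast between universal and individual-based emotion recognition concrete, here is a minimal sketch in Python. It is not Element Human's algorithm. The function names, the 0.5 threshold, the z-score cut-off, and the example scores are all hypothetical, chosen only to illustrate the idea from the talk of baselining each person against their own neutral expression instead of applying one cut-off to everyone.

```python
from statistics import mean, pstdev

# Hypothetical universal cut-off: any smile score at or above it counts as "happy".
UNIVERSAL_THRESHOLD = 0.5


def universal_label(score, threshold=UNIVERSAL_THRESHOLD):
    """Apply the same fixed threshold to every person."""
    return score >= threshold


def individual_baseline(neutral_scores):
    """Summarize one person's neutral expression as (mean, standard deviation)."""
    return mean(neutral_scores), pstdev(neutral_scores)


def individual_label(score, baseline, z_cutoff=2.0):
    """Flag a reaction only if it departs clearly from this person's own baseline."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the baseline: any increase counts as a reaction.
        return score > mu
    return (score - mu) / sigma >= z_cutoff


# Illustrative (made-up) smile scores recorded while each person watches neutral content.
expressive_person = individual_baseline([0.55, 0.60, 0.58, 0.62])
reserved_person = individual_baseline([0.05, 0.08, 0.06, 0.07])

# The expressive person's resting face already clears the universal threshold,
# while the reserved person's clear reaction never reaches it.
print(universal_label(0.60), individual_label(0.60, expressive_person))
print(universal_label(0.20), individual_label(0.20, reserved_person))
```

In this toy setup the universal threshold treats the expressive person's resting face as a reaction and misses the reserved person's real one, while the per-person baseline does the opposite. That mirrors the false positives and false negatives described in the talk.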

As AI continues to shape our digital world, an empathetic and human-centered approach is more important than ever. By focusing on individual experiences, fostering open collaboration, and prioritizing ethics, we can develop AI systems that truly enhance the quality of life and respect human dignity in our increasingly digital interactions.

The Transcript

CrossOver AI - How AI can Build a Better World, Matt Celuszak, Founder and CEO, Element Human

===

Matt Celuszak: [00:00:00] Thanks, Natalie. I appreciate you inviting us to Crossover AI. I'm calling in from across the pond over in Europe, but I spend half my time here and half the time in Victoria. It's a really, really interesting time for the artificial intelligence field. We've gotten to a point where machines can recognize a lot of things.

And the area that I'm going to focus on today is around recognizing human beings, specifically emotions and behaviors. I come from about a 16 year background in people measurement systems, working with actually Angus Reid, and before that in the Department of Human Kinetics at the University of British Columbia.

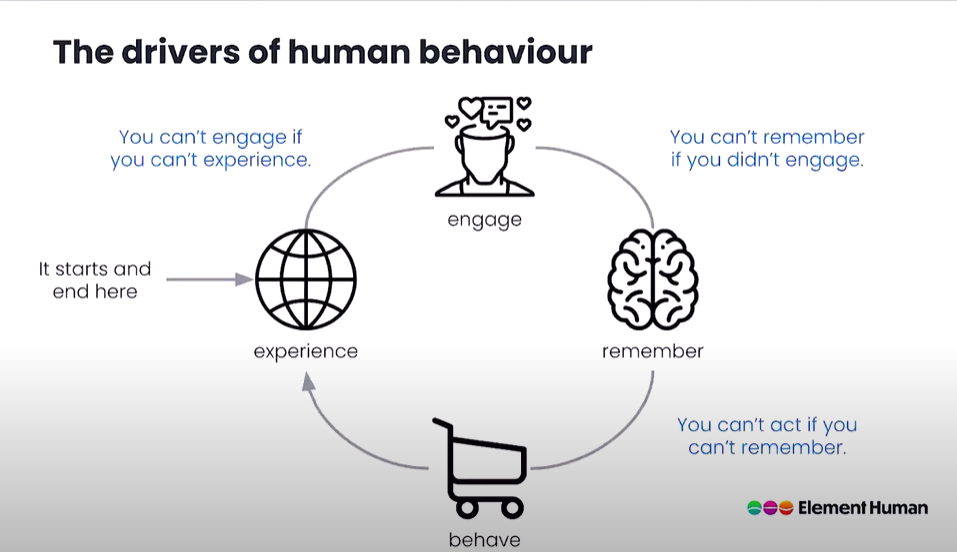

And I'm really, really fascinated with the interaction between people and machines and specifically what can machines understand about us that we don't necessarily pick up ourselves. Or even more importantly, are there ways that machines can serve us in a more empathetic way? And we built our business, Element Human, under this core belief that emotions and behaviors are the connective tissue between what people experience and what they do.

And today we're going to get into some of the, let's call them hard-earned lessons over the last seven years of working with people data while artificial intelligence was going from support vector machines to CNNs and further on into other areas now, and how that's improving the capability to understand people at the scale and speed of the performance data that companies are dealing with today.

Just to give you a bit of background on who we are and why we should even bother chatting and listening to us. We're a human experience intelligence platform. And I just want to unpack that a little bit. Human experience to us is the mechanism by which people interact with other things, be it human beings, be it technology, throughout their life.

And the experience starts with them entering an environment, having an experience and leaving the environment to ultimately do something related to that experience. Experiences form our memories, they form our actions and behaviors, they form our perceptions of society, and they're really, really good indicators on how we're going to behave and act and receive the world in the future.

So we built this with an idea of, well, about seven years ago, and 2.5 billion data points. We're collecting and activating sensors on devices like webcams to understand where people are looking and how they're feeling. And are they enacting any body language that we would normally capture face to face.

But now when people buy online, they're not buying face to face anymore. They're buying through a machine. So how do we understand that? We did it in a safe way and consensual way with over 500 people across 89 countries. I can also tell you that we've learned a few things and we've learned them the hard way.

What's really fascinating for us is just simply about this cycle of the human experience. When you start and enter an experience, let's call this an environment. So this could be a room, this could be a car, this could be a digital experience, and in digital there's all kinds of different ones. There is virtual reality through to simply a website or video.

Right now a lot of companies are moving and migrating into this digital economy, specifically trying to create end to end customer experiences. And in their view, they have to create this experience as an attraction, a location for you to go to, to pay attention to something. Once it grabs your attention, you then think about it, hopefully write it to memory.

And when you remember it, you act upon it, or you have a higher chance of acting upon it at their conversion level. So normally in, in back of the 2000s, you, you would kind of, if you wanted to buy a product, you'd go to a store and buy a product. It was the world of, of, of retailers. And then it turned into the world of brand.

You became loyal to someone like Apple, and now it's turning into the world of e commerce. This end to end customer experience, direct to consumer, as they say. But you can't act if you can't remember. You can't remember if you didn't engage, and you can't engage if you can't experience. And a lot of our digital platforms tell us how we behave.

Did we click on it? Did we buy something? How much? They also tell us if we entered the, the experience and how long we spent there, or even where we're looking and, and clicking on, on the screen. What they don't tell us is though why people are doing what they're doing. How did I feel about it? And what type of body language and human behavior do I exhibit during the experience?

And this is what marketers and CEOs use to optimize their experience and strategy. So we broke that down and put it into a technology stack using artificial intelligence in parts and frankly using just good old form and coding [00:05:00] surveys to be able to start asking questions and capturing human data in a curated way so that it was machine learnable against performance data.

So this is when we talk about the human experience platform. This is the type of data that we collect here. We've got experience; identity, who you are; attention, where you're looking, what's capturing your attention; emotion, how do you feel about it, what's engaging; memory, did this increase or decrease your memory and your affinity towards a brand or towards whatever the activity is; thought, how'd you feel about it?

Tell us, how did you process that and logically feel about it? What did you do as an action? And then of course, how did that drive revenue or how did that drive learning or how did that drive safety, whatever the final metric might be. So we came to three considerations for human artificial intelligence that we've learned over this time in the seven years.

But we come across these all the time and they're constantly at the core of our business. So today is more about sharing a few war stories on why these considerations exist and why they should exist with anybody building an artificial intelligence solution for the future. So first one is individual versus universal, second one is open versus closed, and the third one is people versus profit.

Let's go into individual versus universal. In a lot of the artificial intelligence capabilities, when you're building these types of recognition systems, or when you're looking to apply them, you're taking some sort of algorithm that's been limited by some sort of training set. And the transferability of that training is dependent on what the use case is, how similar it is to the training set, and of course, in other cases, how well your algorithms can learn and adapt.

In the emotion space, for years, psychology and science was very much built around this idea of universal emotion classification. Everybody who smiled was happy. We weren't seeing that. We weren't seeing that at the very beginning, and so we had to start doing a lot of studies around how do we build much better recognition.

And not only were we not seeing that, we were seeing a lot of misfires. There were a lot of false positives and false negatives going on, in that people would appear very sad, but they weren't actually quite sad. In fact, half the time, they were smiling. So why were they registering sad? And so we had to ask these types of questions.

Ultimately, about five years later, there was a really good paper published by Dr. Lisa Feldman Barrett reviewing a thousand different approaches in academia around universal facial expressions, not finding any consistency. Which kind of proves our internal data set, where we found and had already refactored towards an individual based approach.

But here's how we would test for something like that. We work with people like Bear Grylls to be able to show the world a very defined emotional piece of content. Which I'm going to play for you here.

Ready for this?

Oh my goodness,

this one has been living in there. A very, very long time. I'm not going to need to eat for a week after this.

Pound for pound, insects like these contain more protein than beef or fish. They're perfect survival food.

Matt Celuszak: So as you can see there, I have quite a disgusting video. And that whole emotional state was a more humorous disgust. What's fascinating, and I've used this video a number of times for demonstrations, people react differently.

So if you're watching this with somebody else, take a look at how they react and think about how you're reacting. Did you cover your mouth? Did you lean back and smile? Did you look disgusted or fearful? These are all different reactions that everybody has to this particular type of content. And they're all equally valid because they're all based on the individual.

But what it more exposed was on the false negative side. We put this head to head against a big tech algorithm that is out there on the market today being used across a number of different industries in a universal capacity. And ours is in blue, which uses an individual approach, and theirs is in orange using a universal approach.

And what you can see here is real world data, first of all, is quite messy. It doesn't look anything like your training data from a lab. So you have to get out there and get real world data.

So in this case, if there's an orange box that appears, that's the big tech algorithm. The [00:10:00] blue box is an individual algorithm that we've created. So simply by going from the universal to the individual approach to classification, we're able to eliminate a number of issues. Another big one, which unfortunately caught me by surprise.

I was doing a meeting with the largest media company in the world, with their senior executives on their news side. And we were going around the room and I was demonstrating the technology and each individual person was trying it out to see how it read their face live. And that was the first time we learned that computer recognition systems don't do very well with black skin or dark skin faces.

I can assure you, it was the most embarrassing moment of my life. And as a scientist, and the way that we look into our stuff, we went to try and fix it and learned a number of things. And it was really nice to see in this new data set that we were able to create being able to crack that challenge.

The challenge is actually twofold: classifications are often skewed towards upper class or wealthier societies, and often skewed towards academia, in which case you're dealing with very, very biased training data sets. So if you're going to be building artificial intelligence for a better world beyond, it has to be inclusive and you have to think about it that way.

And so we're quite happy about how this one turned out. And again, you're seeing red here. Red means that there was no recognition at all, and orange would be the standard algorithm that's used by a lot of the world today. We'd be using it and it wouldn't even find a face inside this video.

So now you've eliminated people with beards and you've eliminated dark skin people. So you've got rid of about, you know, a third to two thirds of the world. So then the next question is what happens with people who don't have full, really high quality video coming at you as well. And this one has to, this video had a pretty low bit rate.

And again, what you're seeing is very, very low recognition of even finding the face. So if you're looking at computer vision recognition systems, yes, people love to advertise, it's huge, it's scalable, but you got to get real right into the details. So in this case, that big tech algorithm head to head just wouldn't even perform on more than 10 percent of the population.

So the challenges that you have around when you're thinking about individual versus universal building artificial intelligence systems is real world data is messy. So if you're going to train anything and you want an algorithm that's usable, you want to apply it properly and have it be usable across more use cases, you have to worry about the trainability and transferability.

So you need to get out of the lab and train on real world data, get into the use case. For us, we learned that universal classifiers in emotion are not applicable universally. And there's two ways to solve this. One way is very much to boil the ocean. Try and measure everybody on the planet and therefore you'll be able to build an individual algorithm for everyone on the planet or at least classify people by their cultural sets.

That's making a huge assumption around cultures. There's been a lot of international travel for many years. Living in the UK, living in Canada, we've got two of the most diverse countries in the world. And yet, how can we possibly say that a male 34 years old is the same as another male 34 years old? I think we have to be really careful with those types of algorithms.

And then the final one is: what is happy to one person is not happy to another. Everybody operates differently. We talk about this in stereotypes. Brits don't smile. Americans are over happy. But when we actually did a global study around this, it wasn't isolated to the country you were from.

In fact, there was no correlation to that. It was very much clustered by people who were just more emotionally expressive versus those that weren't. So the key here was, was how do we baseline against an individual? And ultimately on this one, it's about then taking it to the edge. We'll talk about privacy in a moment.

The second thing that came up in our company, that's a guiding principle that we find very interesting when building for tomorrow, for a better human based tomorrow, is this theme around open versus closed. One of the challenges with building proprietary IP is that everybody gets caught up, particularly as a founder, your shareholders, your employees all get caught up on this:

You got to keep the secret sauce behind the cloak. [00:15:00] And we've actually found quite the opposite. We kept it behind the cloak for a while and weren't able to drive a lot of revenues. And the moment I started hosting what I call the wood panel and wine rooms. And basically I just rented the top floor of a British pub and we hosted people around.

It was a round table session where we'd have about 15, 20 people. And what we would do is we would simply just talk about, here's the big issues that we want to solve. And we have something called Chatham House Rules, which means that you can talk about anything you want, and you can leave and express some of the ideas, but you can't reference who it came from.

This allowed us to create an open environment to talk to people who were in competing businesses, competing CMOs, and lay out the real challenges that they're facing. It validated our product proposition and our product market fit, but it also opened up a lot of business for us. And I think the interesting thing about artificial intelligence is, there are a lot of people who know a lot about AI, but when it comes to artificial intelligence and applying it properly in a business, it has to be configured to your business.

And ultimately, it's a people solution. So if you are going to serve artificial intelligence to your customers, and that's going to be a value proposition of your business, whether you're building it from a core level of how do you make CNNs better and provide improvement there, all the way through to the application of it, you have to consider who that end user is, and ultimately it's about empowering them.

AI is a tool. It's a means to an end. And we found that by opening up, we were able to open up a lot of things and it raised a lot more concerns for us that we were allowed to address early on, which put us in a strong position when GDPR hit.

So for us, the first bit was seeing is believing for a lot of people. So showing them the science and more importantly, talking about these stories that we're sharing with you today, talking about the pain points. Yeah, when we were using support vector machines on a universal classifier, we were misfiring more than we were firing.

It was not great. And you can imagine that, you know, similar to like launching rockets, first bunch of crash and burn. And so it was a little bit tough. So we had to open that up and foster the discussion. And it only helped us build a much stronger client base and a much stronger growth path for our business.

So make your science accessible. It's my first recommendation on that one. And we live by that now. Smart people have good ideas. Another bit here was by bringing that round table together, you foster really, really good ideas. We now do this virtually using a Miro board. It's fantastic. It's a great, great tool.

And you just get online and there's a really good template from Disney, you know, getting people into a dreamer state, realistic state, and then into a critic state and be able to pick apart really good ideas. But by fostering that open culture, you get much better results and much more diversified and inclusive results.

The other one is about AI impacting everyone. If you're going to build an artificial intelligence system, we learned very quickly that actually our customer is not just the business we're serving for market intelligence or for improving education, it's the student whose education and frankly their grades rely on being able to get taught properly.

So it's about making that accessible for everyone and that can be done in a number of ways technically speaking but also just again from an education standpoint. In the artificial intelligence realm you're going to be the expert so make sure that you help people understand that and be open to the discussion and be open to being wrong and having to change your view.

The other thing for us when it comes to computer recognition and vision technologies is consent is not a barrier. It's not something to be overcome. It's a way of life. Accept it. Be part of it. Give people a good reason to engage with you and your artificial intelligence.

Artificial intelligence is scary for a lot of people. But what we've learned over the last seven years is when we started explaining our consent and giving people the option to opt in for research, or opt in and not ever be seen by a human being, strictly be using the algorithms, or not opt in at all, we actually saw a 13 percent opt in rate and 80 percent of people who opted in actually opted in for research and further use.

What was interesting about that is over the last seven years in the market research field, that opt in rate has already grown to about 26, 27 percent. So we're seeing people opt in where they see value. And I think that you have to understand the value exchange with the individual if you're going to serve an organization.

The final one that we're going to talk about is people versus profit. And I almost lost my company over this one. I was chasing one of the largest sports broadcasters in the world, and they had a really, really [00:20:00] cool project that I've always wanted to do. I've always thought, you know, poker players, they don't have a coach kind of like track all stars and athletes do.

So when I was in human kinetics, we would use VO2 max monitors and chest monitors to be able to understand and anticipate performance coming down for long distance runners. And in this case, it was sports broadcast and it was about how do we monitor, you know, some of the sports and some of the pro athletes out there.

The Tiger Woodses, the Roger Federers, those types of people in the world. How do we monitor the performance of what emotional state they're in? So we can feed that back. And it was a fascinating challenge. I can tell you the amount of tonnage going through our algorithm was obscene. This client would have been more than a million pounds a year, and frankly would have made us as an organization.

We would never have had to look for money again. And so we started to get into scoping and it turned out though that they were going to repurpose the information to be used to improve betting odds for betting houses. We liked the use case for mental capacity with athletes. It didn't feel right to be able to manipulate people in that sense.

It's a very powerful piece of technology. And you have to be really, really careful with that. So for us, we actually had to say no and scrounge. We had to scrounge, we had to take pay cuts and we had to figure out how to hit the next milestone. That was two years ago. So we're here and we're growing and I have to say I'm very proud of that.

We're very proud of that aspect. So when you're thinking about people versus profits, that's the one that set us along the side of ethics. But I've also seen a lot of, a lot of talk and a lot of attempt at implementing an ethics committee. Yeah. Ethics have to be owned at the decision level. Yes, a committee is good for having a body there and demonstrating publicly that it's there.

But a true organization is going to have ethical value sitting within the character development and hiring of their people. And I think that has to fundamentally sit with the responsibility of the CEO. And, and it has to be in a, in that individual personal conflict with driving shareholder value, particularly today and particularly with the new markets that are coming up.

If you don't have relevance in the world tomorrow and you can't prove that to the markets that are going to be using your stuff, I think you're going to have a really tough time even realizing your long term profits. The second bit around it, it was around just nobody wants a 4 million euro fine.

GDPR was a right, just kick in the right direction in our view, from a people measurement standpoint. I consult a lot with the UK government around how to direct the digital guidelines around privacy. And for us, the real challenge for a business, and for you if you're building an artificial intelligence business, is how do I create something that is universally applicable so I can have one set of terms that are maybe localized a little bit, but also be able to transfer the types of rights and ethos that we want to the individual.

And so for us, we just took a really hard individual approach. It didn't only comply with GDPR, but we're also a Canadian company as well, so we had to comply with PIPA and some of the Canadian practices as well, and South Korean practices, which just got rewritten. And we've decided that rather than try to just comply with guidelines that are out there, really think the problem through from an individual perspective, so that if it ever landed in court, if the individual rights are always put before the organization, it's gonna be pretty hard to prove that you're in the wrong and on the wrong side of data privacy and protection.

So that's how we handle our data privacy. And that again was a hard learned lesson. Going from, you know, we could use all the data we want to, we actually had to delete. We had to delete over 2 billion data points. Because the consent wording from the early days wasn't exactly up to snuff when GDPR came in.

So it was a hard lesson. And then the final bit is a question of the impact. I think the biggest thing that I'd like to leave you with is saying no to get to the right yes. We found this to be a really, really incredible tactic internally and externally of helping us find really good clients. We put our clients through a KYC process.

They need to understand the impact of the data that they're having and that they're using. And so when you're building for tomorrow, it's really taking a partnership approach and not being afraid to say no. Yes, you're going to be the mouse on the shoulder of elephants. [00:25:00] That's fine. But you're also the expert.

And if you're that expert, you're truly that expert, you'll be able to define that expertise and they'll respect that expertise. So say the right no's to get to yes, to make sure that whatever you deliver is going to be a really, really solid solution that benefits everybody it will impact. So I think that's all I have to share with you today.

Thank you very much for inviting me over to CrossOver AI. And if you have any questions whatsoever, just fire them over into the chat. I really appreciate it. And we're on this massive human journey for us. If we can start to fully understand the human experience in our digital interactions at home, at work, at play, learning, shopping, then I think we can start to create a digital world that actually respects the quality of life and the quality of time. Thank you very much.